The Mills cluster, UD’s first Community Cluster, was deployed in 2012 and is a distributed-memory Linux cluster. It consists of 200 compute nodes (5160 cores, 14.5 TB memory, 49.3 Tflops peak). The nodes are built with AMD “Interlagos” 12-core processors in dual- and quad-socket configurations, giving 24 and 48 cores per node. A QDR InfiniBand network fabric supports the Lustre filesystem (approximately 180 TB of usable space). Gigabit and 10-Gigabit Ethernet networks provide access to additional Mills filesystems and the campus network. The cluster was purchased with a proposed five-year life, putting its retirement in the October 2016 to January 2017 time period.
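The node and core totals above imply a particular split between dual- and quad-socket nodes, although the text does not state it. The sketch below is a rough consistency check, not an official inventory: it assumes every compute node has either 24 or 48 cores, and `node_mix` is a hypothetical helper introduced only for this illustration.

```python
def node_mix(total_nodes, total_cores, small=24, large=48):
    """Return (small_nodes, large_nodes) that satisfy both totals.

    Assumption (not stated in the source): every node has exactly
    `small` or `large` cores, so
        small_nodes + large_nodes = total_nodes
        small * small_nodes + large * large_nodes = total_cores
    """
    # Cores left over if every node were the smaller configuration.
    extra = total_cores - small * total_nodes
    step = large - small
    if extra < 0 or extra % step != 0:
        raise ValueError("no whole-number node mix matches these totals")
    large_nodes = extra // step
    small_nodes = total_nodes - large_nodes
    if small_nodes < 0:
        raise ValueError("no whole-number node mix matches these totals")
    return small_nodes, large_nodes


# Figures quoted in the cluster description: 200 nodes, 5160 cores.
dual_socket, quad_socket = node_mix(200, 5160)
print(f"{dual_socket} dual-socket nodes, {quad_socket} quad-socket nodes")
```

Under that assumption, the quoted figures work out to 185 dual-socket and 15 quad-socket nodes (185 × 24 + 15 × 48 = 5160).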

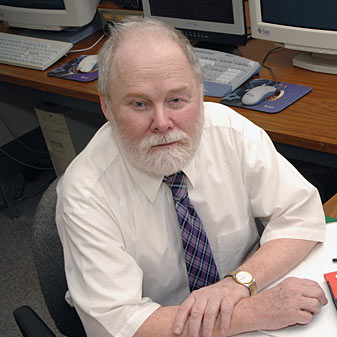

The cluster was named to recognize the significant scientific and engineering contributions of Prof. Emeritus David L. Mills, Dept. of Electrical & Computer Engineering, UD.

This cluster is currently unavailable for purchase.

Please see the Mills End-of-Life Plan and Policies document for complete details.

Provided Infrastructure

| Basic details | |
|---|---|
| Network | |
| Storage | Aggregate storage across the cluster:<br>Workgroups have unlimited access to Lustre scratch, plus: |
| Login nodes | Users connect to the cluster through two login nodes: |